What is the Benchmark Model Review?

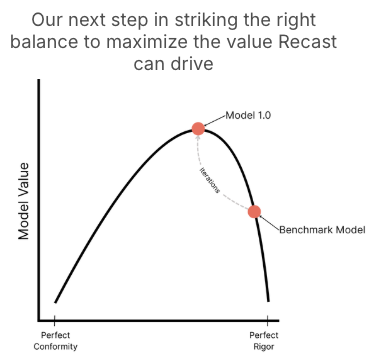

During the model build process, the Recast team works to build the model that maximizes both internal and external validity, as assessed by the Recast Model Checks. The result is a model tuned toward theoretical rigor. However, a model focused purely on theoretical rigor may not be the most valuable model for your business. This review call is our first step toward maximizing that value while staying rooted in the modeling rigor for which you came to Recast.

How the Benchmark Model Review Works

- Live on a call, your Recast delivery team will walk you through:
  - The results of the Recast Model Checks
  - Model inferences regarding:
    - Historical channel efficiency and contribution to your business
    - Historical organic contribution to your business
  - Results of the model’s forecast for the future
  - Results of the model’s optimization for future spend
- After the call, you and your team will be granted access to the Recast platform so you can dig in further yourselves.
- Homework: Your task coming out of this call is to identify the areas that do not align with your business context and could ultimately make the model less actionable. We refer to these as “Gaps”.
- Follow-Up Meeting: We usually allow one week for you to identify these gaps. In the follow-up meeting, you bring the gaps forward, and we take them in and gather any clarifications we need.

How to Identify Gaps?

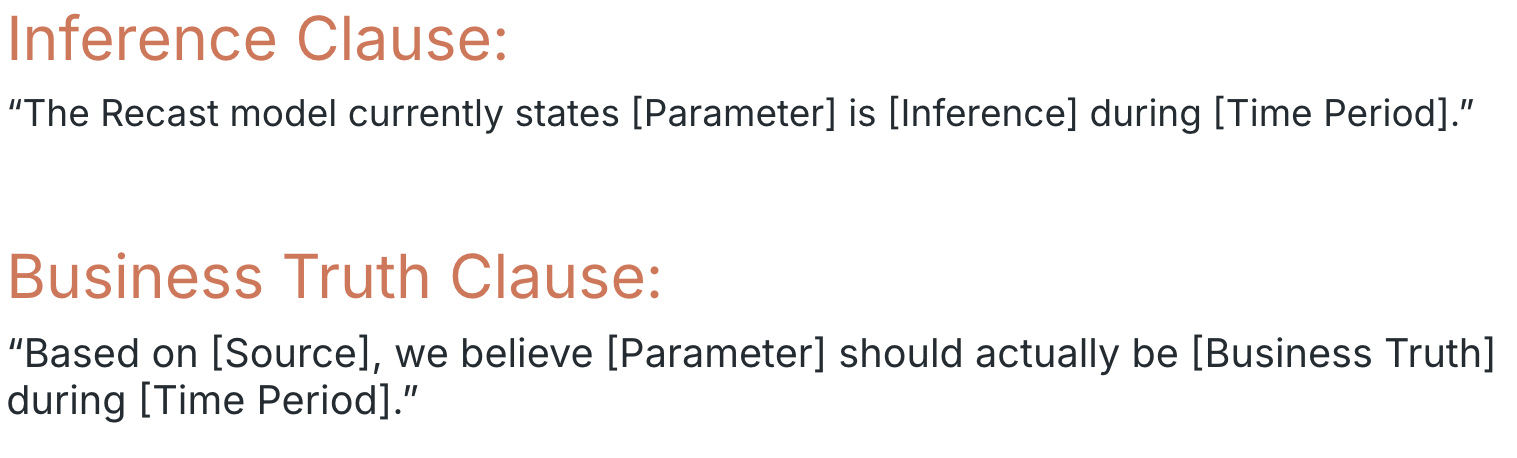

To ensure both parties are clear on the ask, we have a very specific structure for defining gaps. Gap statements should have 2 clauses:

Where should you start?

Please see our suggested Gap Identification Checklist below, which should give you starting points once you receive access to the dashboard.

Insights

In the app, review each of these reports and identify any of the model inferences that surprise you based on what you know to be true about your business.

Overview - Last 365 Days

- Marketing Effectiveness
  - Do any channels appear to be more efficient than what makes sense?
  - Are there channels whose estimates are extremely certain or uncertain?
- Direct Contribution by Channel
  - Do any individual channels dominate the marketing contribution?
  - Do the Baseline and Spikes contributions make sense over the last 365 days?

Baseline

- Does the trend over time in the baseline make sense? Is it generally trending up or down?
- Is there a seasonal component of your business that isn’t well represented in the baseline?

Spikes

- Promotions
  - Are there major promotions that aren’t accounted for here?
  - Are there any promotions that have a bigger or smaller impact than you expected?
  - Are there any promotions that had the opposite impact of what you expected?
- Anomalies
  - Are there any anomalies that aren’t accounted for here that should be?
  - Are there any anomalies that have a bigger or smaller impact than you expected?

Context Variables

- For the context variables included, is the direction of the effect aligned to your expectations?

Experiments

- For the experiments included, do the model’s inferences align closely enough with the results you got from them?
- Do any of the channels an experiment was applied to seem to lose the effect of the experiment too quickly?

Plans

- Open the “Default Plan”
  - Review the Spend Breakdown
    - Does it generally align with how you expect to spend?
    - The spend is estimated using Recast's "business as usual" approach, which assumes your future spend will follow the same general pattern as your historical spend.
  - Review the Forecast Results
    - How does the Forecast Result compare to your goal for the period?
    - How does the Forecasted ROI compare to your expectations for marketing efficiency for the period?
- Review the recommendations from the model. These are nudges to your Default Plan’s budget that the model currently recommends.
  - How do these compare with how you currently think about opportunity across your channel mix?
  - Where the model is recommending you spend up, can you spend up?
  - Where the model is recommending you spend down, are there any reasons why you wouldn’t spend down on those channels?

Note: During the iteration phase, we will not focus on running experiments within the model. In this context, an experiment means exploring open-ended or hypothetical scenarios, such as testing new assumptions or evaluating potential changes that could impact model outputs. These types of exploratory analyses can be revisited with your Impact team after Acceptance.