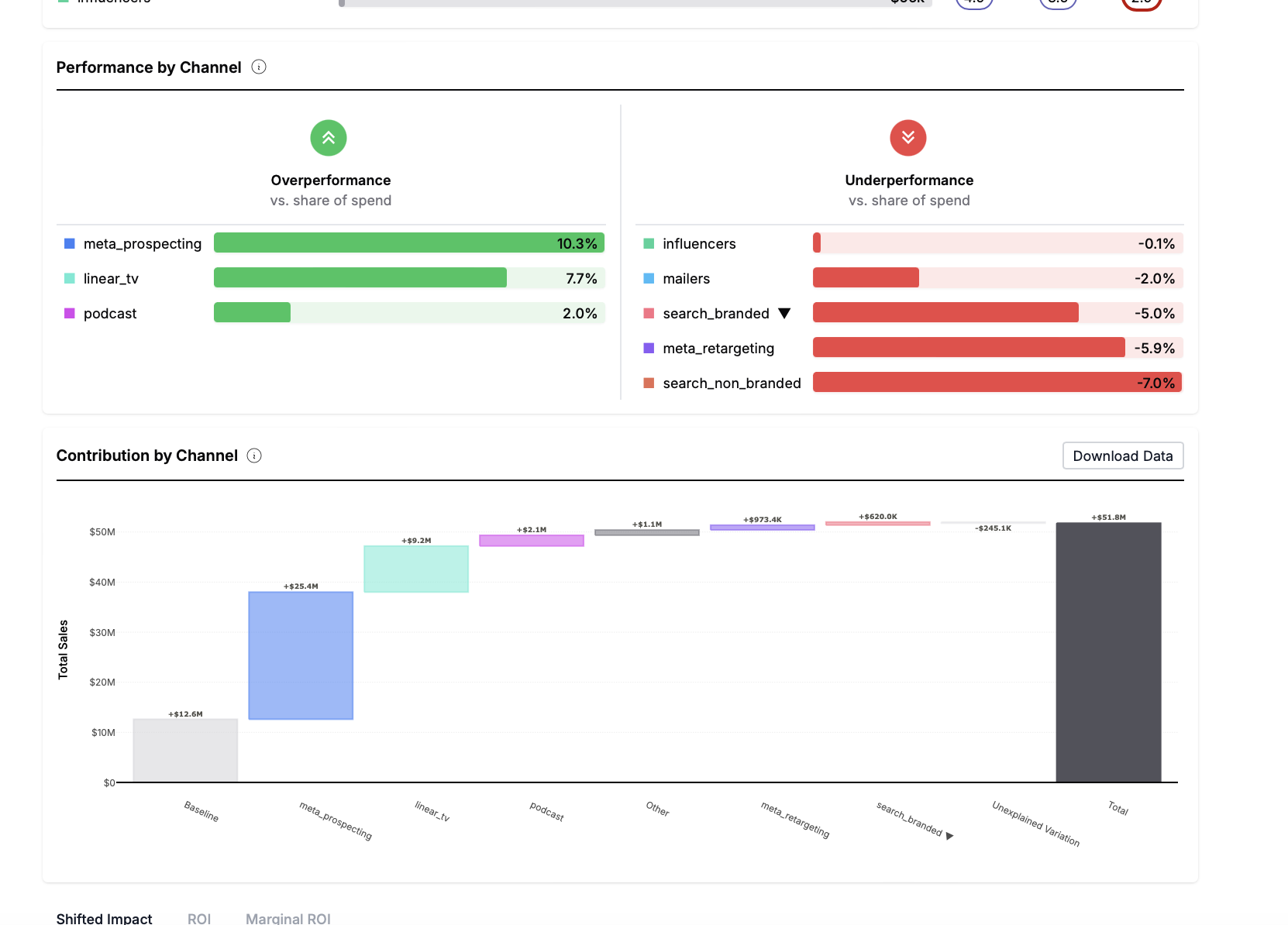

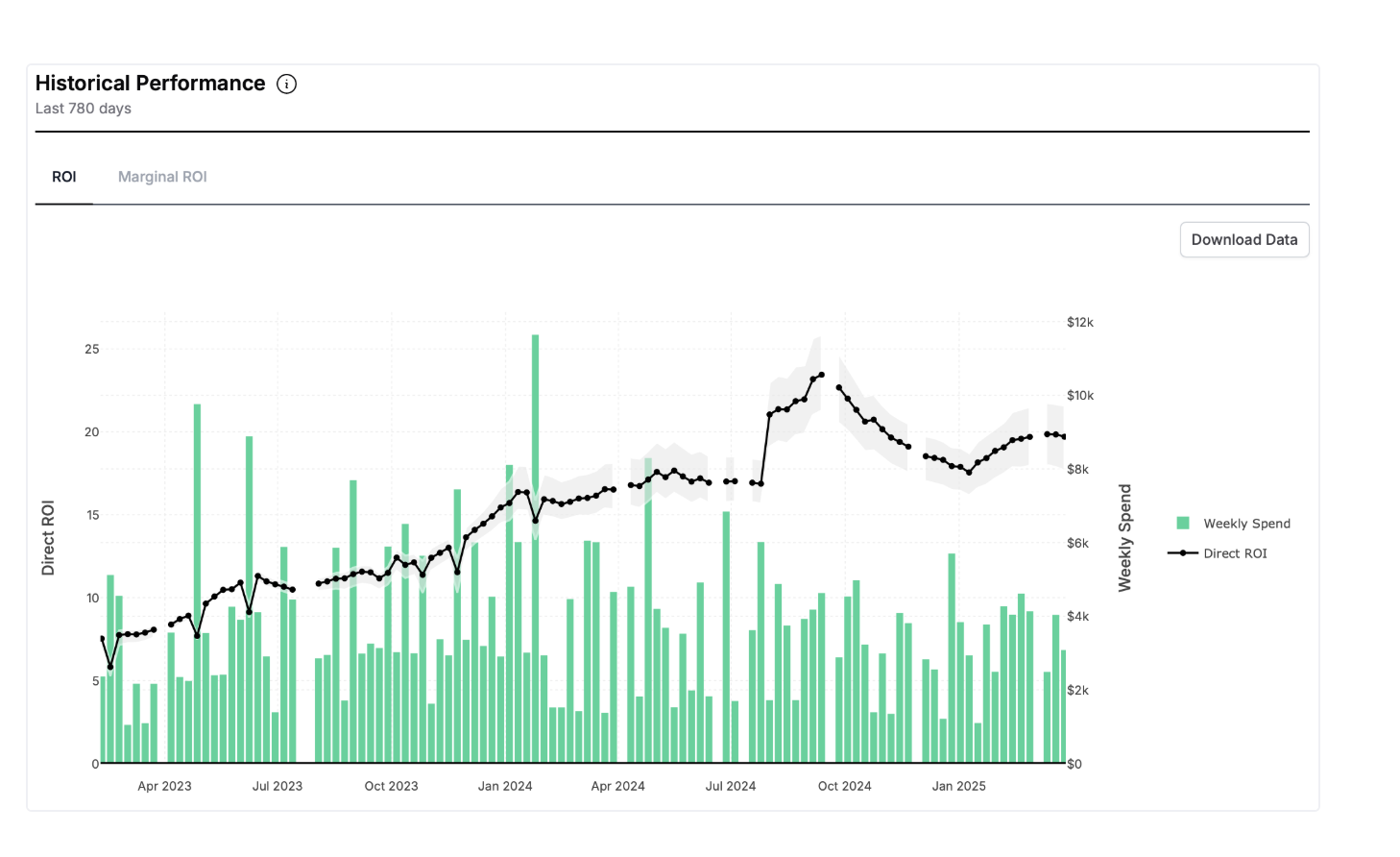

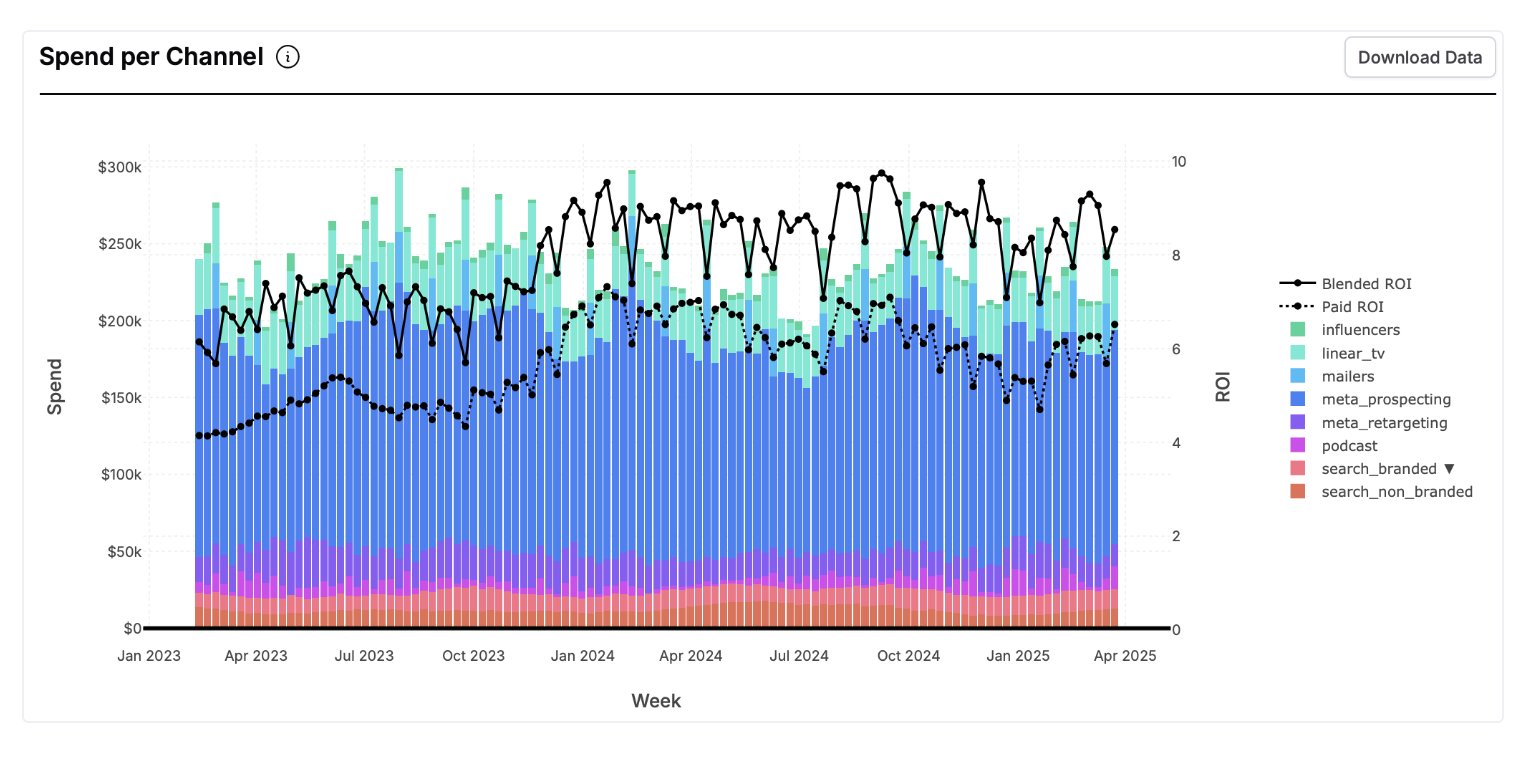

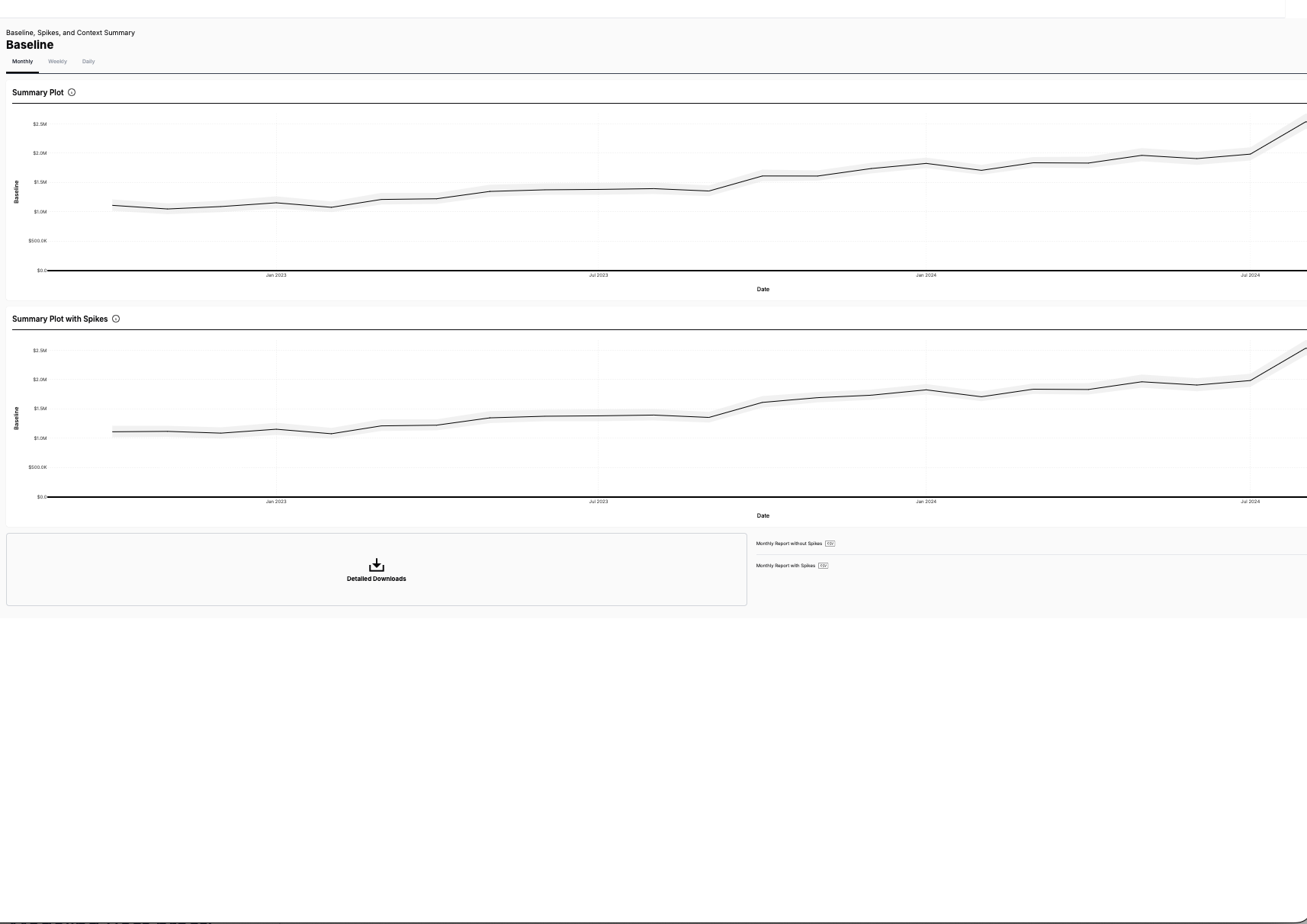

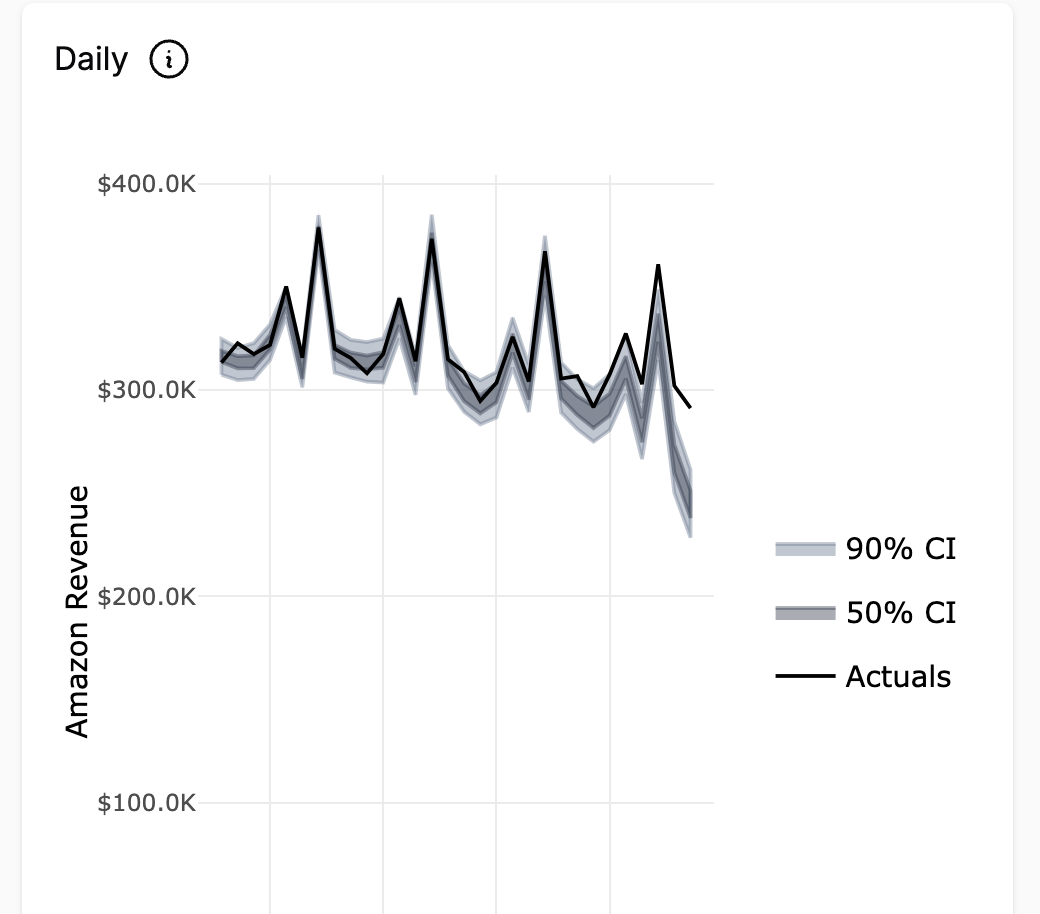

Many people start on the Insights tab. It shows what has happened in the past, along with the results of a statistical model trained on your company’s historical data.

Use the Insights pages to understand how your marketing has been performing recently: identify trends on the overview page, check in on your backtests, and dive deep into your .